Steps to use local models in Cursor.ai: Install Ollama, pull a model like DeepSeek or Llama 3, and verify the localhost connection. In Cursor settings, navigate to “Models”, add your local model name (e.g., “deepseek-r1:latest”), and ensure the OpenAI Base URL is set to http://localhost:11434/v1. This lets you code privately, with no subscription or API costs.

What Are Local Models in Cursor?

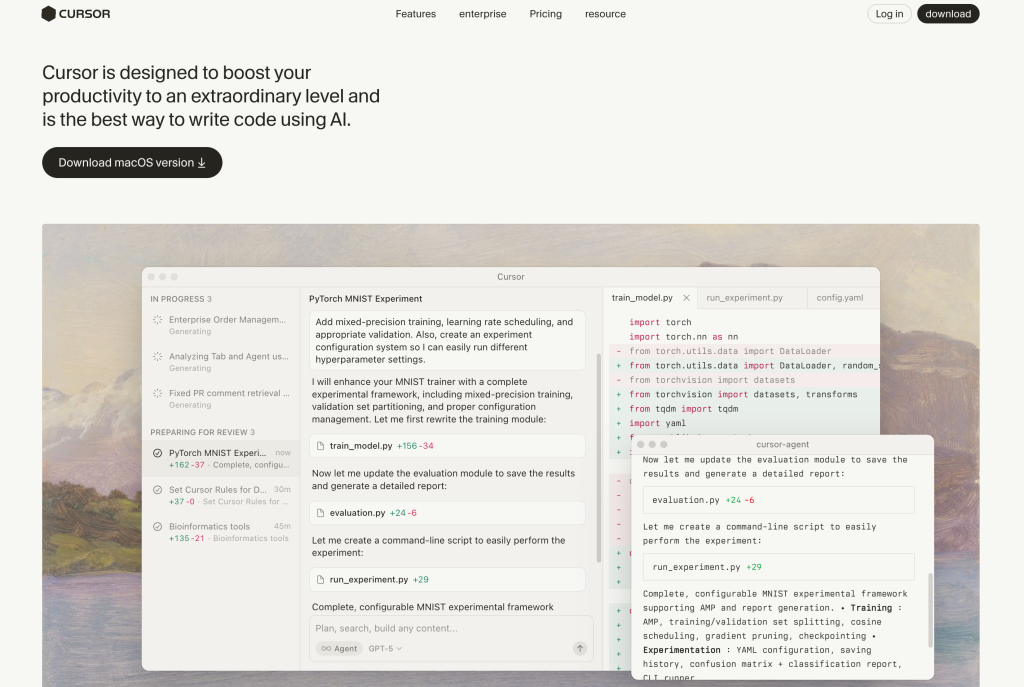

Cursor.ai is powerful, but its Pro subscription (using Claude 3.5 Sonnet or GPT-4) costs $20/month. Local models allow you to run AI directly on your own computer using tools like Ollama or LM Studio.

By connecting a local model to Cursor, you get:

- Zero Cost: No subscription fees or API usage costs.

- Privacy: Your code never leaves your computer.

- Offline Coding: Work without an internet connection.

How to Set Up Local Models in Cursor (Step-by-Step)

Step 1: Install Ollama

Ollama is currently the easiest way to run local LLMs.

- Go to ollama.com and download the installer for Windows, Mac, or Linux.

- Install and run the application.
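Before moving on, it can help to confirm that the Ollama server is actually listening. The sketch below is one way to check, assuming Ollama is on its default address (http://localhost:11434); the helper name ollama_is_running is made up for this example and is not part of Ollama.

```python
import urllib.error
import urllib.request

# Assumption: Ollama is listening on its default address.
OLLAMA_URL = "http://localhost:11434"


def ollama_is_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or similar: treat as "not running".
        return False


if __name__ == "__main__":
    print("Ollama reachable:", ollama_is_running())
```

If this prints False, start the Ollama application (or run ollama serve in a terminal) and try again.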

Step 2: Download a Model

Open your terminal (Command Prompt or Terminal) and type one of the following commands to download a coding model:

- For DeepSeek (Highly Recommended):

ollama pull deepseek-r1:latest

- For Llama 3:

ollama pull llama3

- For Codellama:

ollama pull codellama
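Cursor needs the exact model name later, so it is worth listing what Ollama has stored locally. Note that a model pulled without a tag (such as llama3) is saved with the default tag, as llama3:latest. The sketch below reads Ollama's /api/tags endpoint, assuming the default address; the function names are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is on its default address; /api/tags lists local models.
TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_payload: dict) -> list[str]:
    """Extract the model names from a parsed /api/tags response."""
    return [m["name"] for m in tags_payload.get("models", [])]


def fetch_model_names(url: str = TAGS_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local Ollama server which models it has downloaded."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    try:
        for name in fetch_model_names():
            print(name)
    except OSError as exc:
        print("Could not reach Ollama:", exc)
```

The ollama list command in the terminal shows the same names.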

Step 3: Configure Cursor Settings

This is the tricky part where most people get stuck.

- Open Cursor.

- Click the Gear icon (Settings) in the top right -> Models.

- Scroll down to the “OpenAI API Key” section (Yes, we use this section even for local models).

- Click “Add model” and type the exact name of the model you just downloaded (e.g., deepseek-r1:latest).

- Crucial Step: Look for “Override OpenAI Base URL”. Enter this URL:

http://localhost:11434/v1

- Turn on the switch to enable it.

Step 4: Verify It Works

- Open a chat in Cursor (Cmd/Ctrl + L).

- In the model dropdown menu, select your local model (e.g., deepseek-r1:latest).

- Ask it to write a simple Python function. If it replies, congratulations! You are running fully local AI.
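If Cursor does not reply, it can help to test the same endpoint outside the editor. The sketch below sends one OpenAI-style chat request to the base URL from Step 3, assuming Ollama is running on its default port and deepseek-r1:latest has been pulled. The helper names build_chat_request and ask are made up for this example, and this is only an approximation of what an OpenAI-compatible client sends, not Cursor's actual request.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/v1"  # the value entered in Cursor
MODEL = "deepseek-r1:latest"            # assumption: this model was pulled


def build_chat_request(prompt: str, model: str = MODEL,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the text of the first reply."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    try:
        print(ask("Write a Python function that reverses a string."))
    except OSError as exc:
        print("Request failed:", exc)
```

A reply here with no reply in Cursor suggests the problem is in the Cursor settings rather than in Ollama.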